
Motivation

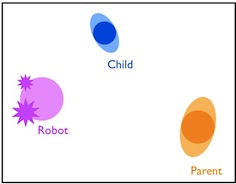

As part of the autism work at the USC Interaction Lab, we have an experiment in which a child, a parent, and a robot interact in a small room (see schematic on the right). The room is equipped with overhead cameras, and the robot has two eye cameras. To improve the interaction and make the robot's behavior more reliable and natural, we need to track not only the robot itself, but also the parent and child.

Tools and Techniques

For this project, we are using the Robot Operating System (ROS) from Willow Garage. Essentially, ROS is a network of connected nodes that pass messages to one another. The hardware is a Bandit III robot mounted on a three-wheeled Pioneer robot base. For filtered tracking of the robot, I chose a Kalman filter. A Kalman filter stores its belief about the state of the world as a Gaussian distribution. Uncertainty increases as the object moves and decreases with each incoming position measurement. Because it allows robot control data to be incorporated into the predicted position, I chose an extended Kalman filter for the robot tracking, and a linear Kalman filter for a generalized 2D tracker (without control information).
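The way uncertainty grows during prediction and shrinks after a measurement can be seen in a minimal linear Kalman filter sketch. This is not the code used in the project: the random-walk model, the noise values `Q` and `R`, and the function names `kf_predict` and `kf_update` are all assumptions chosen for illustration.

```python
import numpy as np

def kf_predict(x, P, F, Q):
    """Propagate the state mean x and covariance P one step forward."""
    x = F @ x
    P = F @ P @ F.T + Q  # process noise Q inflates the uncertainty
    return x, P

def kf_update(x, P, z, H, R):
    """Correct the prediction with a position measurement z."""
    y = z - H @ x                    # innovation (measurement residual)
    S = H @ P @ H.T + R              # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)   # Kalman gain
    x = x + K @ y
    P = (np.eye(len(x)) - K @ H) @ P
    return x, P

# Hypothetical 2D random-walk model: the state is (x, y), measured directly.
F = np.eye(2)
H = np.eye(2)
Q = 0.1 * np.eye(2)   # assumed process noise
R = 0.5 * np.eye(2)   # assumed measurement noise

x0, P0 = np.zeros(2), np.eye(2)
x1, P1 = kf_predict(x0, P0, F, Q)
x2, P2 = kf_update(x1, P1, np.array([1.0, 2.0]), H, R)

# Total variance rises after predict and falls after update.
print(np.trace(P0), np.trace(P1), np.trace(P2))
```

Comparing the three traces shows the behavior described above: the prediction step adds `Q` to the covariance, and the measurement step removes a fraction of it determined by the gain.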

Implementation

I programmed six nodes in ROS: three that I expect to be used for the project, and three for demos and testing.

Project Nodes

WorldToImage

Input: 3D world coordinates and distance from floor to ceiling camera.

Output: 2D image coordinates.
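As a rough illustration of the kind of mapping WorldToImage performs, the sketch below projects a world point into pixel coordinates with an ideal pinhole model. Everything beyond the inputs listed above is an assumption: the camera is taken to look straight down from the ceiling with the world origin on the floor directly beneath it, and `focal_px`, `cx`, and `cy` are hypothetical intrinsics. Lens distortion is ignored.

```python
def world_to_image(X, Y, Z, camera_height, focal_px, cx, cy):
    """Project a world point (metres) into pixel coordinates.

    Assumes an ideal downward-facing pinhole camera mounted at
    camera_height above the floor, with the world origin on the floor
    directly below it and image axes aligned with world X and Y.
    """
    depth = camera_height - Z  # distance from the camera along its optical axis
    if depth <= 0:
        raise ValueError("point is at or above the camera")
    u = cx + focal_px * X / depth
    v = cy + focal_px * Y / depth
    return u, v

# Hypothetical values: a point 0.5 m above the floor under a 3 m ceiling.
u, v = world_to_image(1.0, -0.5, 0.5, camera_height=3.0,
                      focal_px=500.0, cx=320.0, cy=240.0)
print(u, v)
```

The floor-to-ceiling distance matters because the same horizontal offset covers more pixels the closer the point is to the camera.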

KalmanNode

Input: Measured robot position in 2D overhead image coordinates, and the angular and linear velocity of the robot.

Output: Filtered position in 3D world coordinates.
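To show how linear and angular velocity can enter an extended Kalman filter, here is a sketch of a single prediction step. The state layout `(x, y, theta)`, the Euler-integrated unicycle motion model, and the name `ekf_predict` are assumptions made for this example and may differ from what KalmanNode actually does.

```python
import numpy as np

def ekf_predict(state, P, v, w, dt, Q):
    """One EKF prediction step for a state (x, y, theta) driven by
    linear velocity v and angular velocity w over a time step dt."""
    x, y, theta = state
    # Nonlinear motion model (assumed: Euler-integrated unicycle).
    pred = np.array([
        x + v * np.cos(theta) * dt,
        y + v * np.sin(theta) * dt,
        theta + w * dt,
    ])
    # Jacobian of the motion model with respect to the state.
    F = np.array([
        [1.0, 0.0, -v * np.sin(theta) * dt],
        [0.0, 1.0,  v * np.cos(theta) * dt],
        [0.0, 0.0,  1.0],
    ])
    P_pred = F @ P @ F.T + Q  # linearized covariance propagation
    return pred, P_pred

# Hypothetical control input: 0.5 m/s forward, 0.2 rad/s turn, 0.1 s step.
state, P = ekf_predict(np.array([0.0, 0.0, 0.0]), 0.1 * np.eye(3),
                       v=0.5, w=0.2, dt=0.1, Q=0.01 * np.eye(3))
print(state)
```

The Jacobian is what makes the filter "extended": the heading appears inside sine and cosine terms, so the covariance has to be propagated through a linearization of the motion model around the current estimate.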

KF2dTracker

Input: Measured position in 2D overhead image coordinates.

Output: Filtered position in 2D world coordinates.

Testing Nodes

KFContOnly

Input: Angular and linear velocity of the robot.

Output: Filtered position of the robot (using only control information) in 3D world coordinates.

ImgTestNode

Input: Filtered robot position with measurement, filtered robot position with control only, measured robot position, and overhead camera image.

Output: Overhead camera image with representations of filtered robot position, filtered robot position with control only, and measured robot position (see image to the left - position: blue, measurement: yellow, control only: pink).

MovieNode

Input: Images

Output: Saved frames which can be stitched into a movie.

Applications

One fortunate consequence of inaccuracies in the control information available to us is that the filtered orientation is the direction that the robot is going to head, rather than where it is currently facing. We can use that information to have the robot turn its head towards the direction it will be turning, resolving issues we had in earlier experiments where the children experienced distress from not being able to tell where the robot was going to head.

The 2D tracking node can be integrated with additional information about the robot’s position and head information and existing face detection software, allowing us to find a relative angle between the robot and any faces it sees in the scene. This information can then be used to improve localization of the robot, improve localization of the human subjects, or give us additional information to allow us to have the robot’s head track faces in the scene.
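One simple way to express a relative angle, assuming we already have the robot's tracked position and heading and a person's tracked position in the same 2D world frame, is sketched below. The function name `relative_bearing` and the choice of radians wrapped to [-pi, pi) are assumptions for illustration, and the face-detection side is not shown.

```python
import math

def relative_bearing(robot_x, robot_y, robot_heading, target_x, target_y):
    """Angle (radians) from the robot's heading to the target,
    wrapped to the interval [-pi, pi)."""
    bearing = math.atan2(target_y - robot_y, target_x - robot_x)
    diff = bearing - robot_heading
    return (diff + math.pi) % (2 * math.pi) - math.pi

# Hypothetical case: robot at the origin facing +y, person at (1, 1).
angle = relative_bearing(0.0, 0.0, math.pi / 2, 1.0, 1.0)
print(math.degrees(angle))
```

A negative result here means the target lies clockwise from the robot's heading under the usual counterclockwise-positive convention.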

Finally, the 2D tracker, besides being flexible enough to track any object with unpredictable 2D movement, could be used to better track the child when the child is close to the robot. This is currently difficult because the background-subtraction algorithm we are using to detect the child becomes confused when the child is too close to the robot.

Continuing Issues

There are several remaining issues in the use of Kalman filtering to track the robot. The control information available to us from the robot is extremely inaccurate. This may be due in part to the fact that the Pioneer robot base is overloaded. Because of this, I tuned the filter to depend heavily on the measured robot position, but this means that a single bad measurement can throw the whole filter off. Finally, when this system is put into use in the actual experiment, we will be using a fisheye lens, which could cause greater problems from radial distortion than we are currently experiencing.
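The sensitivity to bad measurements follows directly from the Kalman gain. The scalar sketch below, with made-up numbers and hypothetical helper names, compares a filter tuned to trust measurements (small measurement variance `R`) with a more cautious one when both receive the same outlier.

```python
def scalar_gain(P_pred, R):
    """Kalman gain for a scalar state that is measured directly (H = 1)."""
    return P_pred / (P_pred + R)

def scalar_update(x_pred, P_pred, z, R):
    """Corrected estimate after blending prediction and measurement."""
    K = scalar_gain(P_pred, R)
    return x_pred + K * (z - x_pred)

x_pred, P_pred = 100.0, 4.0  # assumed predicted position (pixels) and variance
outlier = 160.0              # a single bad measurement, 60 pixels off

trusting = scalar_update(x_pred, P_pred, outlier, R=0.4)   # gain about 0.91
cautious = scalar_update(x_pred, P_pred, outlier, R=36.0)  # gain 0.1
print(trusting, cautious)
```

With the small `R`, the estimate jumps most of the way to the outlier, while the larger `R` moves it only a tenth of the way. The trade-off is that the cautious filter leans more on the prediction, which is a problem when the control information is as inaccurate as described above.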