|

Motion Tracking from a Mobile Robot

|

|---|

|

| Motivation |

|---|

|

The populated outdoor environment is fairly challenging to

contemporary mobile robots due to diverse motions of

pedestrians, bicycles, automobiles, etc. Since some objects

move faster than the robot, motion detection and estimation

for potential collision avoidance are the most fundamental

skills that a robot needs to function effectively outdoors.

However, detecting motion of external objects from a moving

robot is not easily achievable because there are two

independent motions involved: the motion of the robot (and

hence the sensors it carries) and the motions of moving

objects in the environment. Unfortunately, those two

motions are blended together when measured through a sensor

such as a camera. In order for a robot to detect moving

objects robustly, it should be able to decompose these two

independent motions from sensor readings.

|

|

| Approach |

|---|

|

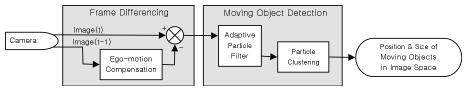

The motion detection process is performed in two steps: the

ego-motion compensation of camera images, and the position

estimation of moving objects in the image space. For robust

detection and tracking, the position estimation process is

performed using a Bayes filter, and an adaptive particle

filter is utilized for iterative estimation.

Figure 1: Processing sequence for motion detection
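For reference, the generic Bayes filter recursion that a position estimator of this kind follows can be written as below. The symbols are assumptions introduced here, not notation from the original: $x_t$ is the object position in image space at time $t$, $z_t$ is the measurement at time $t$ (for example, the compensated difference image), and $\eta$ is a normalizing constant.

$$
P(x_t \mid z_{1:t}) = \eta \, P(z_t \mid x_t) \int P(x_t \mid x_{t-1}) \, P(x_{t-1} \mid z_{1:t-1}) \, dx_{t-1}
$$

A particle filter approximates this posterior with a set of weighted samples instead of evaluating the integral in closed form.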

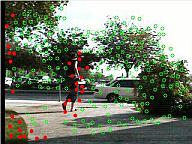

The ego-motion of the camera can be estimated by tracking

features between images. When the camera moves, two

consecutive images, I(t) and I(t-1), are in

different coordinate systems. Ego-motion compensation is a

transformation from the image coordinates of I(t-1)

to that of I(t) so that the two images can be

compared directly. The transformation can be estimated

using two corresponding feature sets: a set of features in

I(t) and a set of corresponding features in

I(t-1). However, since there are independently

moving objects in the images, a transform model and outlier

detection algorithm need to be designed so that the result

of ego-motion compensation is not sensitive to object

motions.
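One common way to make the estimate insensitive to object motion is to fit the transform with RANSAC, so that features on moving objects end up as outliers. The sketch below is only an illustration under stated assumptions: the page does not say which transform model or outlier detector was used, so the 2D similarity model (written as `q = a * p + b` with complex numbers), the function names, the threshold, and the synthetic feature data are all hypothetical.

```python
import cmath
import random


def fit_similarity(p1, p2, q1, q2):
    """Solve q = a * p + b for complex a, b from two correspondences."""
    a = (q2 - q1) / (p2 - p1)
    b = q1 - a * p1
    return a, b


def ransac_similarity(prev_pts, curr_pts, threshold=2.0, iterations=200, seed=0):
    """Return ((a, b), inlier_indices) mapping I(t-1) points onto I(t)."""
    rng = random.Random(seed)
    p = [complex(x, y) for x, y in prev_pts]
    q = [complex(x, y) for x, y in curr_pts]
    best_model, best_inliers = None, []
    for _ in range(iterations):
        i, j = rng.sample(range(len(p)), 2)
        if p[i] == p[j]:
            continue  # degenerate pair, cannot define a transform
        a, b = fit_similarity(p[i], p[j], q[i], q[j])
        # Features whose predicted position is close to the observed one agree
        # with this candidate camera motion.
        inliers = [k for k in range(len(p)) if abs(a * p[k] + b - q[k]) < threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (a, b), inliers
    return best_model, best_inliers


# Synthetic check: eight background features follow the camera motion,
# two features (indices 8 and 9) sit on an independently moving object.
true_a = cmath.rect(1.02, 0.03)   # slight zoom and rotation (assumed values)
true_b = complex(4.0, -2.5)       # image-plane shift (assumed values)
prev_pts = [(10, 10), (50, 12), (90, 15), (20, 60), (70, 55),
            (30, 100), (85, 95), (55, 80), (40, 40), (42, 44)]
curr_pts = []
for idx, (x, y) in enumerate(prev_pts):
    moved = true_a * complex(x, y) + true_b
    if idx >= 8:
        moved += complex(15.0, 9.0)  # extra displacement from object motion
    curr_pts.append((moved.real, moved.imag))

model, inliers = ransac_similarity(prev_pts, curr_pts)
print(sorted(inliers))
```

The features rejected here play the role of the red outliers in Figure 2, while the inliers define the transform used for compensation.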

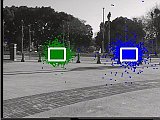

Figure 2: Tracked features (green) and outliers (red)

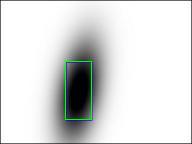

For frame differencing, image I(t-1) is transformed using

the transformation model before being compared to the image

I(t) in order to eliminate the effect of the camera

ego-motion.
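The effect of compensation on the difference image can be illustrated with a small synthetic example. This is a minimal sketch, assuming a similarity transform `q = a * p + b` expressed with complex numbers, nearest-neighbour resampling, and images stored as nested lists. None of these choices come from the original work, and the helper names are hypothetical.

```python
def warp_previous(prev_img, a, b):
    """Resample I(t-1) into the coordinates of I(t) for the model q = a * p + b.

    Pixels whose source falls outside I(t-1) are returned as None.
    """
    height, width = len(prev_img), len(prev_img[0])
    warped = [[None] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            src = (complex(x, y) - b) / a              # inverse mapping
            sx, sy = round(src.real), round(src.imag)  # nearest neighbour
            if 0 <= sx < width and 0 <= sy < height:
                warped[y][x] = prev_img[sy][sx]
    return warped


def frame_difference(curr_img, reference):
    """Absolute difference; pixels without a valid reference are set to 0."""
    return [[0 if r is None else abs(c - r) for c, r in zip(crow, rrow)]
            for crow, rrow in zip(curr_img, reference)]


# Synthetic check: a textured background shifted one pixel to the right by
# camera motion, plus one bright pixel standing in for a moving object.
width, height = 6, 6
prev_img = [[10 * x + y for x in range(width)] for y in range(height)]
curr_img = [[prev_img[y][x - 1] if x >= 1 else 0 for x in range(width)]
            for y in range(height)]
curr_img[2][3] = 255

compensated = frame_difference(curr_img, warp_previous(prev_img, 1 + 0j, 1 + 0j))
uncompensated = frame_difference(curr_img, prev_img)
```

In this toy case the compensated difference is non-zero only at the moving pixel, whereas the uncompensated difference also responds to the background texture.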

|

|

| (a) difference with compensation | (b) difference without compensation |

|

Figure 3: Results of frame differencing

|

Real outdoor images are contaminated by various noise

sources, e.g., poor lighting conditions, camera distortion,

and the unstructured and changing shapes of objects. Thus,

perfect ego-motion compensation is rarely achievable. Even

assuming that the ego-motion compensation is perfect, the

difference image would still contain structured noise on

the boundaries of objects because of the lack of depth

information from a monocular image. Some of these noise

terms are transient and some of them are constant over

time. We use a probabilistic model to filter them out and

to perform robust detection and tracking. The probability

distribution of moving objects in image space is estimated

using an adaptive particle filter, and the final particles are

clustered using a mixture of Gaussians for the position

estimation.
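A single predict, weight, and resample cycle of a basic particle filter over image positions can be sketched as follows. This is an illustration under assumptions, not the authors' implementation: the random-walk motion model, the use of difference-image intensity as the particle weight, the fixed particle count, and all names are hypothetical. The mixture-of-Gaussians clustering is also replaced here by a plain mean, which is only adequate for a single target.

```python
import math
import random


def particle_filter_step(particles, diff_img, rng, motion_sigma=1.0, floor=1e-6):
    """One predict / weight / resample cycle over (x, y) particles."""
    height, width = len(diff_img), len(diff_img[0])
    # Predict: diffuse particles with a random-walk motion model (an assumption).
    moved = [(x + rng.gauss(0.0, motion_sigma), y + rng.gauss(0.0, motion_sigma))
             for x, y in particles]
    # Weight: use the difference-image intensity under each particle.
    weights = []
    for x, y in moved:
        px, py = round(x), round(y)
        inside = 0 <= px < width and 0 <= py < height
        weights.append((diff_img[py][px] if inside else 0.0) + floor)
    # Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))


def mean_position(particles):
    """Average (x, y) of equally weighted particles."""
    n = len(particles)
    return (sum(x for x, _ in particles) / n, sum(y for _, y in particles) / n)


# Synthetic difference image with one bright blob centred at (12, 7).
width, height = 20, 20
diff_img = [[255.0 * math.exp(-((x - 12) ** 2 + (y - 7) ** 2) / 8.0)
             for x in range(width)] for y in range(height)]

rng = random.Random(1)
particles = [(rng.uniform(0, width - 1), rng.uniform(0, height - 1))
             for _ in range(500)]
for _ in range(10):
    particles = particle_filter_step(particles, diff_img, rng)
estimate = mean_position(particles)
```

Because transient noise rarely attracts particles over several consecutive frames, repeated cycles of this kind tend to concentrate the particles on persistent motion.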

|

|

|

| (a) input | (b) particle filter | (c) gaussian mixture |

|

Figure 4: Motion detection procedure

|

Refer to the relevant papers for more details.

|

|

| Experiments |

|---|

|

The algorithms were implemented and tested in various

outdoor environments using three different robot platforms:

a robotic helicopter, a Segway RMP, and a Pioneer2 AT. Each

platform has unique characteristics in terms of its

ego-motion. The robotic helicopter is an autonomous

flying vehicle carrying a monocular camera facing downward.

Once it takes off and hovers, planar movements become the

main motion, and moving objects on the ground stay at a

roughly constant distance from the camera most of the time;

however, pitch and roll motions for a change of direction

still generate complicated video sequences. The Segway

RMP is a two-wheeled, dynamically stable robot with

self-balancing capability. It works like an inverted

pendulum; the wheels are driven in the direction that the

upper part of the robot is falling, which means the robot

body pitches whenever it moves. The pitch angle increases

significantly when the robot accelerates or decelerates.

The Pioneer2 AT is a typical

four-wheeled, statically stable robot.

|

|

|

| (a) robotic helicopter | (b) Segway RMP | (c) Pioneer2 AT |

|

Figure 5: Robot platforms for experiments

|

The performance of the tracking algorithm was evaluated by

comparing its output to the positions of manually tracked objects.

Refer to the relevant papers for more details.

|

|

| Video Clips |

|---|

|

Experimental Platforms

These video clips show the ego-motion of each platform.

|

|

|

| Helicopter (AVI, 5.7MB) | Segway RMP (AVI, 15.8MB) | Pioneer2 AT (AVI, 12.3MB) |

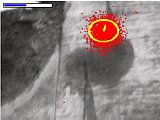

Particle Filter Output

These video clips show the output of the particle filter.

Red dots indicate the position of particles, and the yellow

ellipsoid represents the estimated position (and error). The

estimated velocity (direction and speed) is also shown

using a yellow line inside the ellipsoid. The horizontal

bar in the top-left corner shows the number of particles

being used. The maximum number of particles was set to

5,000, and the minimum number of particles was set to 500.
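The page does not state how the particle count is adapted between these two limits. As a purely hypothetical illustration, the sketch below scales the count linearly with the spread of the particles and clamps it to the stated range of 500 to 5,000. The linear rule, the `spread_at_max` parameter, and the function name are assumptions, not the authors' method.

```python
def adaptive_particle_count(spread, spread_at_max=20.0, n_min=500, n_max=5000):
    """Scale the particle count linearly with particle spread, then clamp.

    `spread` and `spread_at_max` are in pixels. Both the linear rule and
    `spread_at_max` are illustrative assumptions, not the authors' method.
    """
    fraction = max(0.0, min(1.0, spread / spread_at_max))
    return round(n_min + fraction * (n_max - n_min))
```

Under this rule a tightly converged filter runs with the minimum number of particles, and a filter that is still searching the image uses the maximum.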

|

|

|

| Helicopter (AVI, 0.9MB) | Segway RMP (AVI, 5.2MB) | Pioneer2 AT (AVI, 4.4MB) |

Multiple Target Tracking

|

Automobiles (AVI, 2.6MB)

This clip shows multiple particle filters tracking

automobiles. It demonstrates how particle filters

are created and destroyed dynamically. Particles

are shown only when a filter converges.
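One simple way to decide whether a filter has converged is to check how tightly its particles are clustered. The sketch below is only an assumption for illustration: the page does not give the convergence criterion, and the function name and the `max_std` threshold (in pixels) are hypothetical.

```python
import math


def is_converged(particles, max_std=5.0):
    """Return True when the particles' radial standard deviation is small."""
    n = len(particles)
    mean_x = sum(x for x, _ in particles) / n
    mean_y = sum(y for _, y in particles) / n
    variance = sum((x - mean_x) ** 2 + (y - mean_y) ** 2
                   for x, y in particles) / n
    return math.sqrt(variance) <= max_std


tight = [(10 + 0.1 * i, 20 - 0.1 * i) for i in range(10)]   # clustered particles
scattered = [(0, 0), (100, 100), (0, 100), (100, 0)]        # spread over the image
print(is_converged(tight), is_converged(scattered))
```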

|

|

Pedestrians (AVI, 3.1MB)

This clip shows multiple particle filters tracking

pedestrians. A total of four particle filters are

created. The fourth one is not shown in the video

because it does not converge.

|

|

Intersection (AVI, 2.6MB)

This clip demonstrates the stability of multiple

particle filters. There are two people walking in

different directions, and they intersect in the

middle. However, each particle filter succeeds in

tracking its target continuously.

|

Close the Loop: Follow a Moving Object

|

Follow a person (AVI, 36.5MB)

This clip shows the Segway RMP robot following a person.

When the person enters the camera's field of view,

the robot starts to follow him. There are automobiles and

pedestrians in the background, but the tracking system is

not confused by them. When the person stops and stands still,

the robot loses the target and also stops.

However, when the person starts to walk again, the robot detects

the motion and starts to follow him again.

|

|

|

| Acknowledgements |

|---|

|

This work is supported in part by DARPA grants

DABT63-99-1-0015 and 5-39509-A (via UPenn) under the

Mobile Autonomous Robot Software (MARS) program.

|
